Testing metadata, reproducing results, and training students for synthesis science

In February, 2011, Victoria C. Stodden (Columbia University) organized a symposium at the American Association for the Advancement of Science (AAAS) Conference that addressed Reproducibility and Interdisciplinary Knowledge Transfer (see http://aaas.confex.com/aaas/2011/webprogram/Session3166.html). That symposium dealt primarily with computational results, but her contention that the difficulty verifying published research results might be “leading to a credibility crisis affecting many scientific fields” sparked a debate on whether ecology data archives could be used to verify research results, and if so, what methods would be most effective for accomplishing this.

We therefore launched an educational experiment during the Spring 2011 Masters in Environmental Studies (MES) course in quantitative methods, in which we asked our 27 students to work in teams of 2-3 to conduct an analysis of one or more data sets from the H.J. Andrews Experimental Forest, using metadata and published results to guide that analysis. Students were free to conduct “new” research, or to try to reproduce reported results. Other educational and research objectives motivating this experiment were:

- Quantitative methods and statistics texts typically focus on concepts, and textbook data (rightfully so) are usually shortened, simplified, and sanitized. As a result, students rarely leave the course with a good understanding of how to use, manage, validate, or document large data setsa skill needed when they conduct their own research. We wanted to provide realistic experiences with large data sets.

- We wanted to train students to integrate data and conduct synthesis science.

- We wanted to accustom future researchers to using data archives, a skill that will be increasingly important as the cost of collecting data rises, and more ecological observatories come on board.

- We wanted to gauge the usefulness of existing metadata in helping scientists use archived data, and determine which metadata are the most useful and where are the stumbling blocks to data reuse.

The project was preceded by a field trip to the HJ Andrews (AND) Long Term Ecological Research (LTER) site, where students toured the site, conducted field measurements, and articulated their proposals to the site manager, who provided some advice on whether the proposed datasets were appropriate. The students organized themselves into 12 teams, and completed statistical analysis projects using 18 AND data sets. Students were asked to articulate the process they used to manage the data, what they learned about the analysis process and about statistics, what they found most difficult, and suggestions to improve the metadata.

Student comments about AND metadata were generally positive, and the most common observations were that the metadata were comprehensive and clean, but that they needed improved geolocation (preferably maps with metadata) and consistent transect names across publications. Students also reported a need for more information about methods, e.g., why certain protocols were used and why all data were not measured across all plots, and better definitions and descriptions of (or universal) categorical data and variable names. Reported less often but no less interesting metadata desiderata included: Which statistical tests were run and with what results, links to supporting articles, and less discipline-specific metadata. One group found that some metadata were misleading and that their analysis showed different results than the metadata suggested.

Students generally reported that the experiment was a worthwhile learning experience, and that analyzing a real data set and doing useful work for AND were also valuable. Most difficult for them were the project requirement to conduct analysis using R statistical package , organizing the data, creating a research question and refining hypotheses, using their own research question but other people’s data, and deciding which statistical test to use. Less often students cited as difficulties to overcome the interpretation of data transformations and statistical results, their lack of background in the field, extracting historical data from the literature, and knowing when to use MS Excel or R.

The faculty involved in this project welcome the collaboration of other educators interested in organizing similar educational experiences, and would be happy to share materials they used. More information about the experiment can be obtained by contacting Judy Cushing (judyc@evergreen.edu) or at the course web site http://blogs.evergreen.edu/qqmethods/docs/ .

The authors thank Mark Schulze, Forest Director, H.J. Andrews Experimental Forest, for his extensive help to students and faculty in setting up and conducting this project, AND Principal Investigator Barbara Bond for her encouragement and enthusiasm for the Evergreen MES Students’ work at AND, and Information Manager Suzanne Remillard for advice on AND metadata and website.

We gratefully acknowledge the students who participated in this program, and worked very hard on their data analysis projects:

- Timothy Benedict

- Jason Cornell

- Eryn Farkas

- Lola Flores

- Lucy Gelderloos

- Erin Hanlon

- Evan Hayduk

- Jennie Husby

- David Kangiser

- Heather Kowalewski

- Ryan Kruse

- Jason Lim

- Evan Mangold

- Zachary Maskin

- Melissa Pico

- Jesse Price

- Matthew Ritter

- Kari Schoenberg

- Gregory Schultz

- Allison Smith

- Garrett Starks

- Scott Stavely

- Jerilyn Walley

- Sarah Weber

- Marisa Whisman

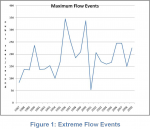

We acknowledge also Tim Benedict’s photographs, and figures from Evan Hayduk and Ryan Kruse.

Enlarge this image

Enlarge this image